How far should we let AI go?

There’s a growing campaign to make sure society comes out a winner

The transformative power of artificial intelligence has come to preoccupy big business and government as well as academics. But as AI’s potential sinks in, a growing number of policy experts — along with some leading figures in technology — are asking tough questions: Should these cutting-edge algorithms be regulated, taxed or even, in certain cases, blocked?

Consider what AI can do in the workplace.

For example, managers realize that office politics, stress and other pressures take a toll on employees. They also know that standard-issue job-satisfaction surveys “don’t provide a true gauge of what’s going on” around the water cooler or in the staff lunchroom, says Jonathan Kreindler, Chief Executive Officer of Receptiviti.ai.

To tap into more candid expressions of employee sentiment, his company, a three-year-old Toronto-based startup, has created an AI algorithm grounded in the research of James Pennebaker, a University of Texas social psychologist who has found that the way employees communicate with each other can provide insight into their behaviour and state of mind.

So, the Receptiviti algorithm scans internal messages for particular words and expressions that Pennebaker says indicate dissatisfaction.
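For readers curious what scanning for "particular words and expressions" might look like in practice, here is a minimal sketch in Python. It is not Receptiviti's algorithm: the marker list and the `marker_rate` function are hypothetical, invented only to illustrate the general idea of measuring how often certain words appear without interpreting what a message is about.

```python
import re
from collections import Counter

# Hypothetical marker words, chosen for illustration only.
# The article does not list the words Pennebaker's research relies on.
DISSATISFACTION_MARKERS = {"frustrated", "overworked", "unfair", "exhausted"}


def marker_rate(messages):
    """Return the fraction of all words in `messages` that are marker words."""
    words = []
    for text in messages:
        # Lowercase the text and split it into simple word tokens.
        words.extend(re.findall(r"[a-z']+", text.lower()))
    if not words:
        return 0.0
    counts = Counter(words)
    hits = sum(counts[word] for word in DISSATISFACTION_MARKERS)
    return hits / len(words)
```

A sketch like this returns only an aggregate rate for a batch of messages. A real system would presumably rely on a much richer lexicon and statistical model.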

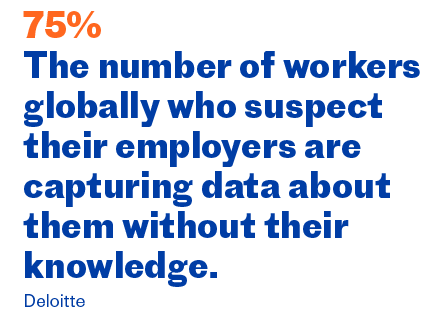

Anyone who works for a large organization will know their emails are not their own; nonetheless, Receptiviti’s service raises questions about privacy, even though Kreindler is quick to point out its goal is simply to find out how employees really feel: “We’re not reading emails to understand what people are talking about.”

He also stresses that “there are no regulations preventing us from doing what we’re doing.” Still, employees may wonder what’s really going on, and firms such as Wall Street’s Goldman Sachs, he adds, have already been called out for scanning emails for certain keywords.

Does computing need human oversight?

Even with the best intentions, a growing number of firms like Receptiviti find themselves thrust into the increasingly high-profile debate about the social implications of AI.

Previous waves of digital development have triggered dialogues about everything from Internet regulation to online bullying and censorship, but AI seems to have raised the stakes. After all, it points to a future in which computing doesn’t necessarily require human oversight.

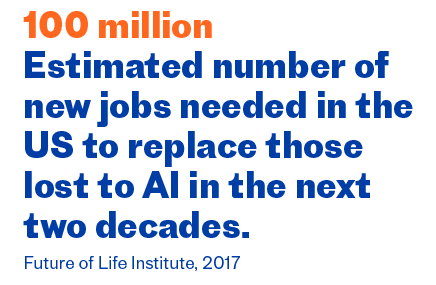

Some critics, as well as AI experts themselves, have focused on the mid-term impact on employment. Forecasts warn of alarming job losses as AI-driven systems are adopted, with everyone from call-centre staff to lawyers at risk of being replaced.

Personal privacy isn’t the only source of friction, says Joe Greenwood, a specialist in data analysis at MaRS Discovery District in Toronto.

Other sensitive areas range from the quality of the data that is used by AI to what happens as robotic devices replace human beings.

In the latter case, even Microsoft founder Bill Gates has suggested special taxes and a slower pace of adoption while authorities ask themselves, as he said in one interview, “Okay, what about the communities where this has a particularly big impact?”

Consent is another issue, Greenwood says. AI systems rely on a variety of sources of consumer data, but have the consumers given permission for their personal information to be combined with less sensitive statistics?

Another major concern is reliability. For example, algorithms are being developed to read and interpret legal contracts and make certain types of medical diagnoses (such as skin cancers).

“Are we confident enough that [the algorithm] has done that well?” Greenwood asks. “Or are we taking it on blind faith?”

As for human oversight, he points out that medical staff no longer double-check the readings of heart monitors; the same could well happen with diagnostic AI.

And what if the AI has bad data? What if a person unjustly runs afoul of a facial-recognition device or “predictive policing,” which is designed to anticipate whether people will be victims of crime, or commit it?

Writing in TechCrunch last year, Kristian Hammond, a Northwestern University computer scientist, identified several types of algorithmic bias, some of it absorbed from the human actors who interact with AI. (One oft-cited example: Tay, an AI-driven Twitter account created by Microsoft, became so aggressively racist and misogynist when let loose online that it was shut down within 24 hours.)

Calls for regulatory oversight have begun.

The high-profile tech figures expressing concerns about machine learning are led by Tesla’s Elon Musk. Early this year, he spearheaded a high-level effort to develop guiding principles for intelligent automation through the Future of Life Institute, a Boston think-tank that sponsors research on promoting a safe future for humanity. Dozens of leading researchers and entrepreneurs — such as physicist Stephen Hawking, Skype co-founder Jaan Tallinn, and Montreal AI researcher Yoshua Bengio — put their names to the Asilomar Principles, a list of 23 tenets they consider a necessary ethical foundation for this technology (Asilomar is the California conference centre where the summit took place).

How can we hold AI accountable?

As Greenwood puts it, the principles pose the question: Do AI-based systems require accountability mechanisms if something goes wrong with the way the technology functions?

As well, AI Now, a New York University-based think-tank founded by data scientists Kate Crawford and Meredith Whittaker, holds annual conferences on a range of issues related to machine learning. This summer, the group teamed up with the American Civil Liberties Union to identify algorithmic bias in AI-affected fields as disparate as money lending and parole rulings.

North of the border, the Canadian Institute for Advanced Research (CIFAR), which funds the newly established Vector Institute through the federal $125-million Pan-Canadian AI Strategy, promotes AI and has the additional mandate to investigate its social, legal and ethical implications, says Brent Barron, CIFAR’s director of public policy.

Barron says CIFAR is tracking the debates taking place within AI as it develops a policy framework for Canadian research and development, and will convene advisory panels to scope out what should be addressed. But he also says “it’s early days” and, for now, many AI startups will have to play cat-and-mouse with the evolving regulatory environment in jurisdictions across the country.

Kathryn Hume, vice-president of products and strategy for Toronto-based startup Integrate.ai, says her company has done this already. Founded less than a year ago, Integrate is testing a machine-learning system that draws on disparate data pools maintained by large companies with extensive consumer bases. The algorithm is meant to come up with solutions that aim to optimize customer engagement.

The underlying idea — how can we keep our customers happy by analyzing data patterns — seems straightforward enough. But Hume points out that, when the algorithm also draws on third-party sources of data, such as credit scores, to develop conclusions, it immediately confronts questions about privacy and consent.

Technically, she says, the company will erect a “mathematical wall” between the analysis and the underlying data (an emerging concept known as differential privacy) because it is seeking patterns the data contains, not anything associated with a particular individual.
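One common way to build such a wall is the Laplace mechanism, a standard textbook technique in differential privacy. The sketch below is an illustration only, not a description of Integrate.ai's system: the function names, the `epsilon` privacy parameter and the sample data are all assumptions. It answers a counting question about a data set after adding random noise, so the result reveals the overall pattern while masking whether any one person is included.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that satisfy `predicate`.

    Adding or removing one person changes a count by at most 1, so the
    noise scale is 1 / epsilon. A smaller epsilon means more noise and
    stronger privacy. (Illustrative sketch; names are hypothetical.)
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Example: roughly how many customers in a made-up list have a score under 600?
scores = [550, 720, 610, 580, 690, 800, 590]
noisy = private_count(scores, lambda s: s < 600, epsilon=0.5)
```

The analyst sees only the noisy total, never the individual records. Repeated queries use up the privacy guarantee, which is why production systems also track a privacy budget.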

Despite those assurances, Hume points out that, in such regions as the European Union, regulatory authorities have already begun to promulgate AI-related policies, such as right-to-know laws and rules requiring AI firms to be prepared to explain just how their algorithms generated particular outcomes.

At the moment, she says, the difficult questions about the implications of AI adoption outnumber the answers: “The legal community and the regulators have a lot of work to do to figure out what all this means.”

Guiding principles for AI

The Future of Life Institute says that AI development guided by the Asilomar Principles, the following 23 measures for the advent of the intelligent machine, will “offer amazing opportunities to help and empower people in the decades and centuries ahead.” Adopted by delegates to the institute’s annual gathering this year at the Asilomar conference centre in Monterey, Calif., they fall into three main categories:

Research

1. The intelligence created should be beneficial.

2. Investments should be accompanied by funding to ensure AI is used well, including such thorny questions as how we can prevent systems from malfunctioning and increase prosperity while maintaining people’s resources and purpose.

3. There should be a constructive exchange between AI researchers and policy-makers.

4. A culture of co-operation, trust and transparency should be fostered among AI developers.

5. Teams developing AI should strive to work together and not cut corners on safety.

Ethics and values

6. AI should be verifiably safe and secure, where feasible.

7. If a system causes harm, it should be possible to ascertain why.

8. Any involvement by an autonomous system in judicial decision-making should provide a satisfactory explanation auditable by a competent human authority.

9. Creators of advanced AI have a stake in its use and misuse, and are responsible for its outcomes.

10. AI’s goals and behaviour should align with human values.

11. It should be compatible with ideals of human dignity, rights, freedoms and cultural diversity.

12. People should have the right to access, manage and control the data they generate, given AI’s ability to analyze and utilize that data.

13. The application of AI to personal data must not unreasonably curtail personal liberty.

14. AI should benefit and empower as many people as possible.

15. Prosperity created by AI should benefit all of humanity.

16. Humans should choose how and whether to delegate decisions to AI systems, to accomplish human-chosen objectives.

17. Rather than subvert, AI should respect and assist the processes on which a healthy society depends.

18. An arms race in lethal autonomous weapons should be avoided.

Long term

19. We should avoid strong assumptions regarding upper limits on AI capabilities.

20. AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

21. Risks posed by AI, especially those that are catastrophic or existential, must be subject to planning and mitigation efforts commensurate with their expected impact.

22. AI designed to improve or reproduce itself rapidly must be subject to strict controls.

23. Superintelligence should be developed only in the service of widely shared ethical ideals, and for the benefit of all humanity.

Adapted from futureoflife.org/ai-principles

John Lorinc
